Event-data duality: the quantum paradigm of agentic architectures

Between movement and trace, a new way to think about system intelligence.


I have always been fascinated by the wave-particle duality discovered in quantum physics. Isn’t it amazing that the same reality can be both energy in motion and observable matter?

This interest was born on the benches of my engineering school of digital technology, EFREI, and it has accompanied me from my first real-time developments to my current reflections on data and agentic architecture.

In the summer of 2025, facing the sea, I felt this fascination again.

The light there drew changing reflections, oscillating between transparency and brilliance.

It then appeared to me that everything depends on the encounter between what illuminates and what receives.

This observation reminded me of wave-particle duality and of the role that observation plays in it. Depending on how it is observed, light reveals itself either as a wave or as a particle.

That day, I wanted to revisit these foundations. I decided to reread articles and listen to an audiobook on quantum mechanics, in order to test a deep-seated conviction based on an analogy.

The deep structure of our information systems also obeys a natural duality between movement and memory.

This parallel seems obvious to me in modern information systems. One can imagine that the event represents the wave (movement, flow, signal) and that the data corresponds to the particle (trace, memory, form).

Understanding this duality is understanding how to build more responsive systems. It’s understanding how to make them adaptive and intelligent. It’s also being able to connect the physical world and the digital world in a continuous flow of interactions.

From sensor to data: when the wave becomes matter

In real-time embedded systems, a simple sensor can trigger a whole chain of actions.

Heat spot detected: fighter jet nozzle at coordinates x : y : z.

An infrared sensor perceives a variation, and the code interprets it: the event then becomes data.

The event (the wave) thus condenses into stable information, which guides the defense system in its action and informs the pilot’s decisions.
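The sensor-to-data step can be sketched in a few lines. This is a minimal illustration, not avionics code: the `InfraredReading` and `Detection` types, the 0.8 intensity threshold, and the coordinates are all hypothetical. A raw reading is a transient event; only readings above the threshold are condensed into an immutable detection record.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class InfraredReading:
    """A raw, transient sensor event (hypothetical schema)."""
    timestamp_ms: int
    intensity: float  # normalized between 0.0 and 1.0
    x: float
    y: float
    z: float


@dataclass(frozen=True)
class Detection:
    """Stable, immutable data derived from an event."""
    timestamp_ms: int
    position: Tuple[float, float, float]
    label: str


HEAT_THRESHOLD = 0.8  # assumed value, for illustration only


def interpret(reading: InfraredReading) -> Optional[Detection]:
    """Condense an event into data, or discard it as noise."""
    if reading.intensity < HEAT_THRESHOLD:
        return None
    return Detection(reading.timestamp_ms, (reading.x, reading.y, reading.z), "heat spot")


readings = [
    InfraredReading(1000, 0.35, 10.0, 4.0, 2.5),  # background noise
    InfraredReading(1010, 0.92, 12.0, 4.5, 2.5),  # a real heat spot
]
detections = [d for d in (interpret(r) for r in readings) if d is not None]
```

Freezing the dataclass is a deliberate choice: once the event has become data, the record is not meant to change.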

Editorial flows: from dispatch to signal

Later, in journalistic information systems, the logic remained the same. An AFP dispatch (Agence France-Presse, the French counterpart of the Associated Press), made of raw text and analyzed against certain criteria (rule-based code, no AI at the time), triggered an editorial event. Its purpose was to alert a journalist to a potential topic for the next morning’s edition.
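This kind of coded, rule-based analysis can be sketched as simple keyword scoring. The rules, weights, threshold, and the `EditorialAlert` type below are hypothetical; the sketch only shows how a raw dispatch becomes an editorial event once it matches enough criteria.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical editorial rules: keyword -> weight
RULES: Dict[str, int] = {"election": 3, "strike": 2, "minister": 2, "weather": 1}
ALERT_THRESHOLD = 4  # assumed score needed to alert a journalist


@dataclass
class EditorialAlert:
    dispatch_id: str
    score: int
    matched: List[str]


def analyze(dispatch_id: str, text: str) -> Optional[EditorialAlert]:
    """Score a raw dispatch; emit an editorial event only above the threshold."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    matched = sorted(keyword for keyword in RULES if keyword in words)
    score = sum(RULES[keyword] for keyword in matched)
    if score >= ALERT_THRESHOLD:
        return EditorialAlert(dispatch_id, score, matched)
    return None


alert = analyze("dispatch-001", "The minister announced an early election date.")
```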

Application integration and Business Intelligence: data as a trigger

I observed the same dynamics in application integration and Business Intelligence, a field where data shapes the strategic decisions of companies.

Transformed data published on an application bus acted as a triggering event for other systems, causing data updates in various business applications. It was a true sign of life in an ecosystem that I had to interconnect.
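A toy in-memory bus makes this mechanism concrete. `ApplicationBus`, the `customer.updated` topic, and the two subscribing "applications" are hypothetical stand-ins for real integration middleware: publishing one piece of transformed data triggers an update in every subscribed business application.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class ApplicationBus:
    """Minimal synchronous publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, Any]) -> None:
        # Publishing the data is itself the triggering event for every subscriber.
        for handler in self._subscribers[topic]:
            handler(message)


# Two hypothetical business applications, each keeping its own copy of customer data
crm: Dict[str, Dict[str, Any]] = {}
billing: Dict[str, str] = {}

bus = ApplicationBus()
bus.subscribe("customer.updated", lambda m: crm.update({m["id"]: m}))
bus.subscribe("customer.updated", lambda m: billing.update({m["id"]: m["address"]}))

bus.publish("customer.updated", {"id": "C42", "name": "Ada", "address": "1 Main St"})
```

A real bus would deliver messages asynchronously and durably; the synchronous loop here only keeps the sketch short.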

Flow as living memory

In all these cases, the event becomes data. The flow freezes into memory, transforming movement into an exploitable trace. This is the moment when the possible becomes real.

It is the digital equivalent of the passage from a state of possibility to a real state. In other words, the precise instant when the flow transforms into data, when intention becomes trace.

To clarify my point, imagine consumers browsing an e-commerce site. Their hesitations form a flow of intentions. The moment they confirm their cart, this fluid behavior freezes into structured data, turning a simple purchasing possibility into a measurable transaction that the company can analyze.
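That moment can be sketched as follows, assuming a hypothetical clickstream schema (`type`, `sku`, `price`): browsing events are transient intentions, and only the `checkout` event condenses the current cart into a structured `Order` record.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class Order:
    items: Dict[str, int]  # sku -> quantity
    total: float


def replay_session(events: Iterable[dict]) -> Optional[Order]:
    """Replay a browsing session; return an order only if the cart is confirmed."""
    cart: Dict[str, int] = {}
    prices: Dict[str, float] = {}
    for e in events:
        if e["type"] == "add_to_cart":
            cart[e["sku"]] = cart.get(e["sku"], 0) + 1
            prices[e["sku"]] = e["price"]
        elif e["type"] == "remove_from_cart" and cart.get(e["sku"], 0) > 0:
            cart[e["sku"]] -= 1
            if cart[e["sku"]] == 0:
                del cart[e["sku"]]
        elif e["type"] == "checkout" and cart:
            # The flow of intentions freezes into a measurable transaction
            total = round(sum(prices[sku] * qty for sku, qty in cart.items()), 2)
            return Order(dict(cart), total)
        # "view" events and other hesitations leave no persistent trace here
    return None  # the session ended without a purchase


session = [
    {"type": "view", "sku": "p1"},
    {"type": "add_to_cart", "sku": "p1", "price": 20.0},
    {"type": "view", "sku": "p2"},
    {"type": "add_to_cart", "sku": "p2", "price": 15.5},
    {"type": "remove_from_cart", "sku": "p2"},
    {"type": "add_to_cart", "sku": "p1", "price": 20.0},
    {"type": "checkout"},
]
order = replay_session(session)
```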

The quantum mechanics of information

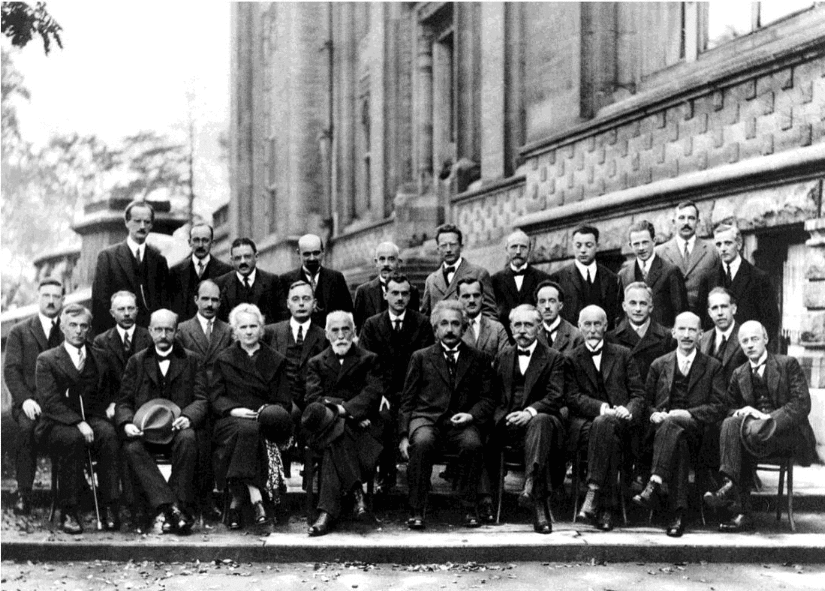

The origin of this idea goes back to the 1927 Solvay Conference1, where Bohr and Heisenberg put forward a dizzying idea: reality only reveals itself at the moment it is observed2.

Earlier that year, Werner Heisenberg3 had stated the uncertainty principle, which led to a memorable exchange with Albert Einstein4.

In layman’s terms, Heisenberg’s position can be summed up as follows: reality does not really exist before it is observed; the particle is just a set of possibilities!

To make this more tangible, imagine a coin spinning on itself. As long as it spins, visually, it is neither heads nor tails; it is a bit of both at once. Only when you catch it (when you observe it) does it become either heads or tails. According to Heisenberg, microscopic particles behave somewhat like that: before we look at them, they exist in multiple states at once.

Einstein, on the other hand, opposes this. He refuses the idea that reality depends on human observation, summing up his position in this phrase: “I like to think the moon is there even if I am not looking at it.”5

Participants in the 5th Solvay Conference, held in October 1927 on the theme “Electrons and Photons” at the International Solvay Institute of Physics in Leopold Park, Brussels. (Seventeen of the twenty-nine attendees were or became Nobel Prize winners.)6

Ultimately, before observation, the world is nothing but a field of possibilities: a set of potentialities awaiting interaction.

This shift from the possible to the actual is what physics calls wave packet reduction7: the moment when a multitude of possibilities condenses into a single observed state. It finds an unexpected echo in today’s architectures.

Observation and event: the tipping point

In the context of this article, Einstein is undoubtedly right: the information system does exist (servers, networks, and infrastructure are very concrete).

But, in the spirit of Heisenberg’s uncertainty principle, each event that passes through it acts as an observation: it freezes an instant of the flow and gives it shape and meaning.

Let’s transpose this to digital systems. Every time a user clicks on a website, a bank transaction is made, or a connected sensor takes a measurement, a transformation occurs. These observations convert what was just a signal into concrete, measurable data.

Before this capture, information exists only as a signal among others. The act of observing, whether it is a detection algorithm, a streaming API, or an analysis engine, creates data as much as it reveals it.

Now, imagine for a moment a website before a user clicks. Several paths are possible. But as soon as the click happens, only one of these possibilities materializes and becomes real data, recorded in the system. This mechanism strikingly resembles the principles of quantum physics: the act of observation, whether digital or physical, transforms the wave of possibilities into a particle of reality.

In the digital ecosystem, it is therefore observation (the click, the measurement) that gives life to data. Thus, without observing events, one cannot detect relevant new data.

Streaming: the circulation of the possible

Étienne Klein8, who speaks of a “suspended reality”, a world of potentialities9, and Niels Bohr10, who describes phenomena that exist only through the act of measurement11, shed new light on our understanding of streaming.

Streaming is this continuous movement that conveys the field of possibilities without fixing it.

When the possible becomes memory

Let’s now talk about the streaming bus, this software infrastructure that continuously transports information.

In modern architectures, it embodies the field of possibilities: a space where events circulate, waiting to be interpreted, until a computation or an observation transforms them into persisted data.
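To make the metaphor concrete, here is a deliberately simplified sketch that assumes a single in-process queue rather than a real streaming platform. Events wait in the queue, in a kind of suspended state, until a consumer observes them and persists a derived record. The `observe` function, the event fields, and the `store` dictionary are hypothetical.

```python
from collections import deque
from typing import Deque, Dict

# Events in transit: nothing has been persisted yet
bus: Deque[dict] = deque([
    {"sensor": "t1", "celsius": 21.5},
    {"sensor": "t2", "celsius": 23.0},
    {"sensor": "t1", "celsius": 22.1},
])

store: Dict[str, float] = {}  # persisted data: the latest reading per sensor


def observe(event: dict) -> None:
    """The act of consumption turns an event into persisted state."""
    store[event["sensor"]] = event["celsius"]


while bus:
    observe(bus.popleft())
```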

In quantum physics, Werner Heisenberg12 and then Niels Bohr13 showed that a particle has neither a defined position nor a defined state before being observed; it exists only through the act of measurement.

Similarly, in an information system, an event becomes real only when it is captured: the observed flow then takes shape in the data memory.

Towards the physics of the information system

Thus, our information systems orchestrate this permanent passage between flow and persistence, between perception and memory, as if each of them replayed, in its own way, the quantum theater of reality.

Matter in motion: when data becomes event again

But the reverse is just as true. In a modern architecture, data can become event again.

As soon as it feeds an AI model, a business rules engine, or an automated action, it comes back to life.

For example, customer data can trigger a personalized recommendation.
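A minimal sketch, assuming a hypothetical rule table that maps a purchased category to a category to suggest: a stored customer record is turned back into an event, here a `recommendation` message that other components could act on.

```python
from typing import Dict, List, Optional

# Hypothetical business rules: last purchased category -> category to suggest
RULES: Dict[str, str] = {"tent": "sleeping bag", "camera": "memory card"}


def on_customer_updated(customer: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Turn a stored customer record back into an event, if a rule applies."""
    suggestion = RULES.get(customer.get("last_purchase_category", ""))
    if suggestion is None:
        return None
    return {"type": "recommendation", "customer_id": customer["id"], "suggest": suggestion}


outbox: List[Dict[str, str]] = []
customers = [
    {"id": "C1", "last_purchase_category": "tent"},
    {"id": "C2", "last_purchase_category": "book"},  # no rule: stays pure memory
]
for record in customers:
    event = on_customer_updated(record)
    if event is not None:
        outbox.append(event)
```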

Active flow: from data to signal

Let’s take an example from distributed information systems and real-time data processing (data streaming or streaming data14).

When data is streamed through a platform such as Kafka15, it leaves its static storage. The platform transmits information in real time between systems, and the data then becomes an active signal, capable of triggering other actions.
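Streaming platforms are commonly fed by change data capture, which turns modifications of stored tables into events. The sketch below does not use Kafka or any CDC product; it is a hypothetical in-memory approximation that compares two snapshots of a table keyed by primary key and emits one change event per insert, update, or delete, so that static rows start moving again as signals.

```python
from typing import Dict, List

Row = Dict[str, object]


def diff_snapshots(before: Dict[int, Row], after: Dict[int, Row]) -> List[dict]:
    """Emit change events that turn table state into a stream."""
    events: List[dict] = []
    for key in sorted(before.keys() | after.keys()):
        if key not in before:
            events.append({"op": "insert", "key": key, "row": after[key]})
        elif key not in after:
            events.append({"op": "delete", "key": key})
        elif before[key] != after[key]:
            events.append({"op": "update", "key": key, "row": after[key]})
    return events


before = {1: {"name": "Ada", "tier": "silver"}, 2: {"name": "Bob", "tier": "gold"}}
after = {1: {"name": "Ada", "tier": "gold"}, 3: {"name": "Cy", "tier": "silver"}}
changes = diff_snapshots(before, after)
```

Snapshot diffing is only the simplest way to illustrate the idea; production CDC tools usually read the database’s own transaction log instead.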

Static data gets moving again, just like matter that becomes wave again. It is the natural cycle of intelligent architecture: a permanent circulation between state and action, between memory and flow.

Intelligence as a living loop


Database triggers are a clear example: they embody the system’s ability to react in real time to an event, turning a simple change into a deliberate action of the information system.
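As an illustration, here is a small example using SQLite through Python’s standard `sqlite3` module; the `accounts` and `events` tables are hypothetical. An `AFTER UPDATE` trigger turns a change in stored data into a new row in an event table, which a downstream process could then consume.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL);
CREATE TABLE events (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    account_id INTEGER,
    old_balance INTEGER,
    new_balance INTEGER
);
-- Every actual balance change leaves an event behind
CREATE TRIGGER balance_changed AFTER UPDATE OF balance ON accounts
WHEN NEW.balance <> OLD.balance
BEGIN
    INSERT INTO events (account_id, old_balance, new_balance)
    VALUES (NEW.id, OLD.balance, NEW.balance);
END;
""")

conn.execute("INSERT INTO accounts (id, balance) VALUES (1, 100)")
conn.execute("UPDATE accounts SET balance = 80 WHERE id = 1")
conn.execute("UPDATE accounts SET balance = 80 WHERE id = 1")  # no change, so no event
rows = conn.execute("SELECT account_id, old_balance, new_balance FROM events").fetchall()
```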

Data is no longer a simple result; it becomes an actor, triggering new dynamics within the system.

Norbert Wiener16, with cybernetics, showed that intelligence emerges from the information loop: perceive, act, correct.
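The loop can be illustrated with the simplest feedback controller. This is a generic proportional-control sketch, not taken from Wiener’s work; the gain of 0.5 and the thermostat-like numbers are assumptions. At each step the system perceives the gap to its goal, acts in proportion to it, and the next perception measures the effect of that correction.

```python
from typing import List


def feedback_loop(target: float, state: float, gain: float = 0.5, steps: int = 10) -> List[float]:
    """Perceive, act, correct: a proportional feedback loop."""
    history = [state]
    for _ in range(steps):
        error = target - state  # perceive: measure the gap to the goal
        state += gain * error   # act: correct in proportion to the gap
        history.append(state)   # the trace feeds the next perception
    return history


trajectory = feedback_loop(target=20.0, state=12.0)
```

With a gain of 0.5 the remaining gap halves at each step, so the state converges toward the target without overshooting.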

This vision is echoed today in our digital architectures: they link data flows to data storage, the movement of the world to its trace.

And that is probably where the event-data duality lies: this permanent dialogue between the flow that carries life and the data that keeps its memory.

The intelligence of flows serving the three paths to performance

Event-driven architectures thus embody the living loop between perception and memory.

By reconciling flow and trace, they offer companies the ability to differentiate themselves on one major value axis while helping to consolidate a second one, in line with the model developed by American researchers Michael Treacy and Fred Wiersema17.

This model, published in 1993, identifies three value disciplines. The first is operational excellence (producing efficiently and at lower cost); the second is customer intimacy (understanding and serving each customer as closely as possible to their needs); the third is product leadership (innovating to offer the best product on the market).

From stream-oriented architectures to agentic AI

From the stream-table duality to autonomous agentic systems, each step illustrates how data and events respond to each other, giving life to truly intelligent architectures.

The stream table model: thinking of data in continuity

My reflection builds on the work of Jay Kreps, Martin Kleppmann, and Lutz Hühnken, who each explored how software architecture is being transformed around the stream-table paradigm. This term designates the complementarity between the continuous flow of events (stream) and the aggregated state it produces (table): two inseparable views of the same data.
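The duality fits in a few lines: folding a stream of events produces a table, and reading the table’s current facts back out produces a stream again. This is a simplified in-memory sketch with a hypothetical event shape (a key and a new value, with `None` meaning deletion), not the API of any particular stream processor.

```python
from typing import Dict, Iterable, List, Optional, Tuple

Event = Tuple[str, Optional[int]]  # (key, new value); None means the key is deleted


def stream_to_table(stream: Iterable[Event]) -> Dict[str, int]:
    """Fold the stream: the table is the latest value per key."""
    table: Dict[str, int] = {}
    for key, value in stream:
        if value is None:
            table.pop(key, None)
        else:
            table[key] = value
    return table


def table_to_stream(table: Dict[str, int]) -> List[Event]:
    """Read the table back as a stream of its current facts (a compacted changelog)."""
    return sorted(table.items())


stream: List[Event] = [("alice", 10), ("bob", 5), ("alice", 12), ("bob", None), ("carol", 7)]
table = stream_to_table(stream)
```

Replaying the compacted stream yields the same table, which is the sense in which stream and table are two views of the same data.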

Jay Kreps showed how the distributed log becomes the unifying element between stream processing and persistent storage, an approach that leads to rethinking data consistency at scale18.
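A minimal sketch of that idea, assuming an in-memory list as the log: records are only ever appended, each reader keeps its own offset, and two independent readers that replay the same log derive the same state. `Log` and `Reader` are hypothetical classes, not Kafka’s API.

```python
from typing import Dict, List, Tuple


class Log:
    """Append-only sequence of records; the offset is the position in the list."""

    def __init__(self) -> None:
        self._records: List[Tuple[str, int]] = []

    def append(self, key: str, value: int) -> int:
        self._records.append((key, value))
        return len(self._records) - 1  # offset of the new record

    def read_from(self, offset: int) -> List[Tuple[str, int]]:
        return self._records[offset:]


class Reader:
    """Derives a view from the log and remembers how far it has read."""

    def __init__(self, log: Log) -> None:
        self.log = log
        self.offset = 0
        self.view: Dict[str, int] = {}

    def poll(self) -> None:
        for key, value in self.log.read_from(self.offset):
            self.view[key] = value
            self.offset += 1


log = Log()
for k, v in [("stock:A", 10), ("stock:B", 4), ("stock:A", 7)]:
    log.append(k, v)

search_index, cache = Reader(log), Reader(log)
search_index.poll()
log.append("stock:B", 9)  # a new fact arrives
search_index.poll()       # continues from its own offset
cache.poll()              # a late reader catches up by replaying from offset 0
```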

Martin Kleppmann, for his part, proposed designing databases as event streams, thus reversing the traditional storage logic19.

Lutz Hühnken, finally, deepened Martin Kleppmann’s idea by distinguishing the dual nature of events, both historical facts and derived states, which underpins the logic of event-driven architectures20.

My proposal extends this logic to the systemic level: that of an event-data duality where movement and memory, the world and its trace, interpenetrate to give rise to truly intelligent architectures.

When observation becomes action

The stream-table duality teaches us that all data is born from movement, and that all movement ends up leaving a trace.

Behind this technical relationship hides a deeper tension: that of the event-data duality.

But what happens when this trace, in turn, begins to observe the movement that generated it?

Then, data ceases to be memory: it becomes gaze.

To observe an event is already to produce a new one because, in the spirit of Heisenberg’s uncertainty principle, every observation transforms what it observes: the observed reality depends on the observation.

The information system, once a simple receptacle, thus enters a living loop where perceiving, understanding, and acting become one.

In this cycle, knowledge does not accumulate, it regenerates.

And it is from this resonance between the flow and its awareness that agentic AI emerges: an intelligence where data remembers the world as much as it reinvents it.

From event-data duality to agentic intelligence

From the event-data duality (in architects’ language, the stream-table duality) to the emergence of autonomous systems, each step illustrates the same dynamic: that of a world where flow and memory respond to each other.

The stream embodies the movement of events, real-time perception, while the table represents stable memory: accumulated knowledge, the data.

The event gives life; the data gives memory. Together, they form the nervous system of agentic AI.

Agents, software entities that are sometimes tiny, perceive the world through the events circulating in the system and draw on the information already stored and observed.

Each agent operates in a limited perimeter but with real autonomy: it listens, interprets, then decides or advises.

Some agents are reactive: they respond instantly to a specific signal, like a digital reflex.

Others are contextual: they consider data memory, time, or situation before acting.

A few, more advanced, become cognitive: they plan, coordinate other agents, evaluate the effects of their decisions, and revise their strategy.
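These three kinds of agents can be sketched as follows. The class names, the pressure readings, the thresholds, and the action names are all hypothetical; the point is only the difference in scope. The reactive agent looks at the event alone, the contextual agent also reads stored memory, and the cognitive agent coordinates the others, evaluates whether earlier actions had an effect, and escalates when they did not.

```python
from typing import Any, Dict, List, Optional


class ReactiveAgent:
    """Digital reflex: responds to one specific signal."""

    def handle(self, event: dict) -> Optional[str]:
        return "shut_valve" if event.get("pressure", 0) > 8 else None


class ContextualAgent:
    """Considers stored memory before acting."""

    def __init__(self, memory: Dict[str, int]) -> None:
        self.memory = memory  # e.g. number of past alerts per site

    def handle(self, event: dict) -> Optional[str]:
        if event.get("pressure", 0) > 6 and self.memory.get(event["site"], 0) >= 2:
            return "schedule_maintenance"
        return None


class CognitiveAgent:
    """Coordinates other agents, evaluates effects, and revises its strategy."""

    def __init__(self, agents: List[Any]) -> None:
        self.agents = agents
        self.last_pressure: Dict[str, float] = {}

    def handle(self, event: dict) -> List[str]:
        actions = [a for a in (agent.handle(event) for agent in self.agents) if a]
        previous = self.last_pressure.get(event["site"])
        # Evaluate: if earlier actions did not lower the pressure, escalate
        if previous is not None and event["pressure"] >= previous and actions:
            actions.append("notify_operator")
        self.last_pressure[event["site"]] = event["pressure"]
        return actions


coordinator = CognitiveAgent([ReactiveAgent(), ContextualAgent({"plant-1": 2})])
first = coordinator.handle({"site": "plant-1", "pressure": 9})
second = coordinator.handle({"site": "plant-1", "pressure": 9.5})
```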

Together, they form a digital nervous system, where each perceived event can trigger an action, and each action leaves a trace.

It is from this dialogue that agentic AI emerges: systems capable of observing and interpreting flows, acting on them, learning from their traces, and generating new ones. It is a continuous loop where perceiving, reasoning, acting, and learning merge to bring agentic architectures to life.
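The loop can be sketched as a tiny simulation. Everything here is hypothetical: an agent that decides whether to send a coupon after an abandoned cart, a made-up `environment` function standing in for customer reactions, and a simple learning rule. The agent perceives events from a queue, reasons with what it has learned so far, acts by publishing new events, keeps a trace of each decision, and adjusts itself when feedback comes back.

```python
from collections import deque
from typing import Deque, List


class Agent:
    def __init__(self) -> None:
        self.threshold = 100.0        # learned parameter: minimum cart value for a coupon
        self.traces: List[dict] = []  # memory of what the agent decided

    def step(self, event: dict, bus: Deque[dict]) -> None:
        if event["type"] == "cart_abandoned":             # perceive
            offer = event["value"] >= self.threshold      # reason with current knowledge
            if offer:                                     # act: publish a new event
                bus.append({"type": "coupon_sent", "customer": event["customer"]})
            self.traces.append({"customer": event["customer"], "offered": offer})
        elif event["type"] == "coupon_ignored":           # feedback on an earlier action
            self.threshold *= 1.1                         # learn: become more selective


def environment(event: dict) -> List[dict]:
    """Hypothetical world reaction to the agent's actions."""
    if event["type"] == "coupon_sent" and event["customer"] == "c2":
        return [{"type": "coupon_ignored", "customer": "c2"}]
    return []


bus: Deque[dict] = deque([
    {"type": "cart_abandoned", "customer": "c1", "value": 50.0},
    {"type": "cart_abandoned", "customer": "c2", "value": 150.0},
])
agent = Agent()
while bus:
    event = bus.popleft()
    agent.step(event, bus)
    bus.extend(environment(event))  # the world's reaction becomes new events
```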

When intelligence emerges from the relationship

Thus, intelligence no longer resides in a central model, but in the dynamics of interactions: a distributed, contextual, and living intelligence, born of the constant dialogue between flow and memory.

Agentic architecture is the operational implementation of the event-data duality. On one side lies the world of events, fluid and moving, animated by flows carried by an event bus such as Kafka.

On the other lies the world of data, stable and structured, kept in lakehouses21, warehouses22, and catalogs23.

Between the two, agents stand as mediators: they listen and react, giving these systems the ability to perceive, understand and act.

Traditional artificial intelligence, by contrast, observes, learns, then predicts from a frozen world of data: traces of the past, carefully stored and analyzed to build models.

It is in this back-and-forth that a new form of intelligence is born: a situated, contextual, autonomous intelligence capable of adapting to the signals of the world in real time.

A vision for tomorrow’s architects

Ultimately, in our architectures, data is the memory of the world and the event is its vital vibration. Together, they form the nervous system of the modern information system’s intelligence. Intelligence in systems no longer lies in the model, but in the flow that links it to the world.

The event-data duality reminds us that the value of data lies not only in what it describes, but in what it enables us to accomplish.

In a moving world, our systems must learn to perceive, interpret, and react in real time.

The challenge is no longer just mastering flows but making them the foundation of intelligence capable of acting.

This is the whole meaning of the agentic era: bringing data memory and event movement into dialogue to build truly living systems.

Quantum physics and data architecture share fundamental principles that deserve to be explored further, pointing toward a world where systems no longer just calculate but understand and interact with their environment.

On this subject, I invite architects, engineers, and curious minds in digital technology to reflect on this question:

How do your systems transform world events into data, and your data into new events? What if this loop became the very foundation of your Agentic AI architecture?

Acknowledgements and personal note

This reflection was born during my time at EFREI, my engineering school, and was nurtured by my years at Nexworld, a consulting firm since integrated into Onepoint. It now continues alongside enthusiasts who relentlessly explore data and agentic architecture.

I want to thank the person who inspired me: Jean Klein (member of the Paris Institute of Nanosciences and physics professor at EFREI, who passed away in 2023). He introduced me to quantum physics and to the works of Planck, Einstein, Bohr, Heisenberg, Schrödinger, and Dirac.

A friendly nod to Serge Bouvet for his exacting writing and the care he takes to convey accurate information.

And above all, thanks to my wife. An avid reader and guardian of the temple that is our library, she devoted her time to helping me catch up on the French, literature, and philosophy classes I had happily skipped to better attend math and physics classes.

She supported me and eased my load in family life with our young children, during all those years when I came home too late from my clients’ sites.

My head was full of data and my eyes full of new ways to make it travel. Thanks to her, I found the time to reflect on fundamentals and observations, which led me to share my conviction here.

So one may lack letters, yet never lack wonder before the beauty of equations and all they make possible in the digital world around us.

It is within the Onepoint teams, driven by the same passion for data platforms, event-driven architecture, artificial intelligence, and agentic AI, that I continue my journey today.

References

1. Don Howard, « Revisiting the Einstein-Bohr Dialogue », 3.nd.edu, page 19, 41 pages, 2007.

2. Niels Bohr, Physical Review, 1935.

3. Werner Heisenberg (1901-1976), German physicist, formulated the uncertainty principle in 1927, a cornerstone of quantum mechanics.

4. Don Howard, « Revisiting the Einstein-Bohr Dialogue », 3.nd.edu, page 1, 41 pages, 2007.

5. D. Song, « Einstein’s Moon », ufn.ru, Uspekhi Fizicheskikh Nauk, Russian Academy of Sciences, page 1, 2 pages, 2012.

6. Photograph by Benjamin Couprie, Institut International de Physique Solvay, Brussels, Belgium (Wikipedia, Creative Commons).

7. Roger Penrose, « On Gravity’s Role in Quantum State Reduction », springer.com, General Relativity and Gravitation, Volume 28, pages 581–600, May 1996.

8. Étienne Klein, physicist and philosopher of science at the CEA (French Alternative Energies and Atomic Energy Commission, roughly comparable to the US DOE and its agencies), explores how physics questions our relationship with reality, particularly through quantum mechanics, time, and scientific language.

9. Le Monde selon Étienne Klein, France Culture, 2012–2014.

10. Niels Bohr (1885-1962), Danish physicist and pioneer of quantum thinking, saw the act of observation not as a simple measurement but as a moment when reality manifests itself: an encounter between the world and the gaze.

11. Physical Review, vol. 48, 1935.

12. « The Uncertainty Principle », stanford.edu, Stanford Encyclopedia of Philosophy, section 2.2, « Heisenberg’s argument », Oct 8, 2001.

13. Ibid., section 5, « Alternative measures of uncertainty ».

14. « Kafka, pierre angulaire des Architectures Fast Data ? », groupeonepoint.com, 2019.

15. Stéphane Chaillon, Frederick Miszewski, Laurent Rossaert, « Stream processing : comprendre les nouveaux enjeux des données diffusées en continu », groupeonepoint.com, 2019.

16. Norbert Wiener, American mathematician and founder of cybernetics, the science of control and communication in systems. He was one of the first to draw parallels between the functioning of living organisms and machines, showing that the exchange of information is at the heart of any stable organization.

17. Michael Treacy and Fred Wiersema, « Customer Intimacy and Other Value Disciplines », hbr.org, 1993.

18. Jay Kreps, « The Log: What Every Software Engineer Should Know About Real-Time Data », engineering.linkedin.com, 2013.

19. Martin Kleppmann, « Turning the Database Inside Out », martin.kleppmann.com, 2015.

20. Lutz Hühnken, « The Dual Nature of Events in Event-Driven Architecture », martin.kleppmann.com, 04 Mar 2015.

21. Lakehouse: a platform where all of an organization’s data, whether raw or structured, is collected. It is a kind of memory lake where traces of the flow are deposited before being analyzed.

22. Warehouse (data warehouse): a more orderly and rigid space, where data is classified, verified, and ready to be used to guide decisions (the organized memory of the company).

23. Data catalog: a directory that tells you what data exists, where it is located, and what it is used for. It is the compass that connects the living flow of events to the stable memory of information.